Deep Learning Tuning Playbook by Google Brain Engineers

Amid the flood of free machine learning advice offered without context or a concrete recipe, applied guides written by the practitioners themselves are rare. A team of scientists from Harvard and Google Brain has joined forces to tackle practical problems in deep learning, especially hyperparameter tuning.

The playbook is for engineers and researchers (both individuals and teams) interested in maximizing the performance of deep learning models. The authors assume basic knowledge of machine learning and deep learning concepts.

Their emphasis is on the process of hyperparameter tuning. They touch on other aspects of deep learning training, such as pipeline implementation and optimization, but the treatment of those aspects is not intended to be complete.

The authors assume that the machine learning problem is a supervised learning problem or something that looks a lot like one (e.g., self-supervised learning). That said, some of the prescriptions in the playbook may also apply to other types of problems.

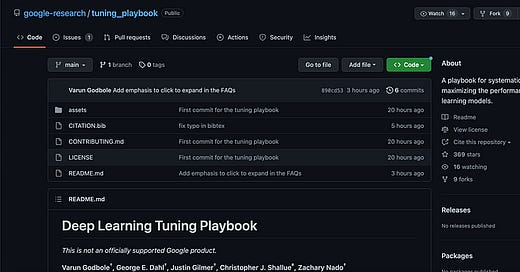

The Deep Learning Tuning Playbook is a goldmine for serious engineers looking to optimize their models, and it is available for free on GitHub. The authors, Varun Godbole, George E. Dahl, Justin Gilmer, Christopher J. Shallue, and Zachary Nado, have done a great job addressing the kind of practical problems faced at Google without ever explicitly referring to the company.

Chapters include:

- Guide for starting a new project
  - Choosing the model architecture
  - Choosing the optimizer
  - Choosing the batch size
  - Choosing the initial configuration
- A scientific approach to improving model performance
  - The incremental tuning strategy
  - Exploration vs exploitation
  - Choosing the goal for the next round of experiments
  - Designing the next round of experiments
  - Determining whether to adopt a training pipeline change or hyperparameter configuration
  - After exploration concludes
- Determining the number of steps for each training run
  - Deciding how long to train when training is not compute-bound
  - Deciding how long to train when training is compute-bound
- Additional guidance for the training pipeline
  - Optimizing the input pipeline
  - Evaluating model performance
  - Saving checkpoints and retrospectively selecting the best checkpoint
  - Setting up experiment tracking
  - Batch normalization implementation details
  - Considerations for multi-host pipelines

A must-read for serious engineers motivated enough to get their hands dirty!

https://github.com/google-research/tuning_playbook#who-is-this-document-for